About Me

I currently work at Zhipu AI, where I lead the O Team, which focuses on reasoning RL, RL infrastructure, and agentic RL for the GLM model family, including GLM-4.5, GLM-4.6, Z1, and related models. Our team develops and maintains slime, an open-source framework for efficient RL training.

Outside reinforcement learning, my earlier work centered on long-context training techniques and on training long-context models. More recently, I have been exploring sparse attention architectures and other emerging approaches for extending model context efficiently.

I completed both my Ph.D. and B.S. in the Department of Computer Science at Tsinghua University, under the supervision of Prof. Juanzi Li. My broader goal is to advance our understanding of reasoning, long-context computation, and training methodologies that support more capable and reliable AI systems.

Publications & Preprints

2022

The list of papers is no longer updated, More details can be found on my Google Scholar.

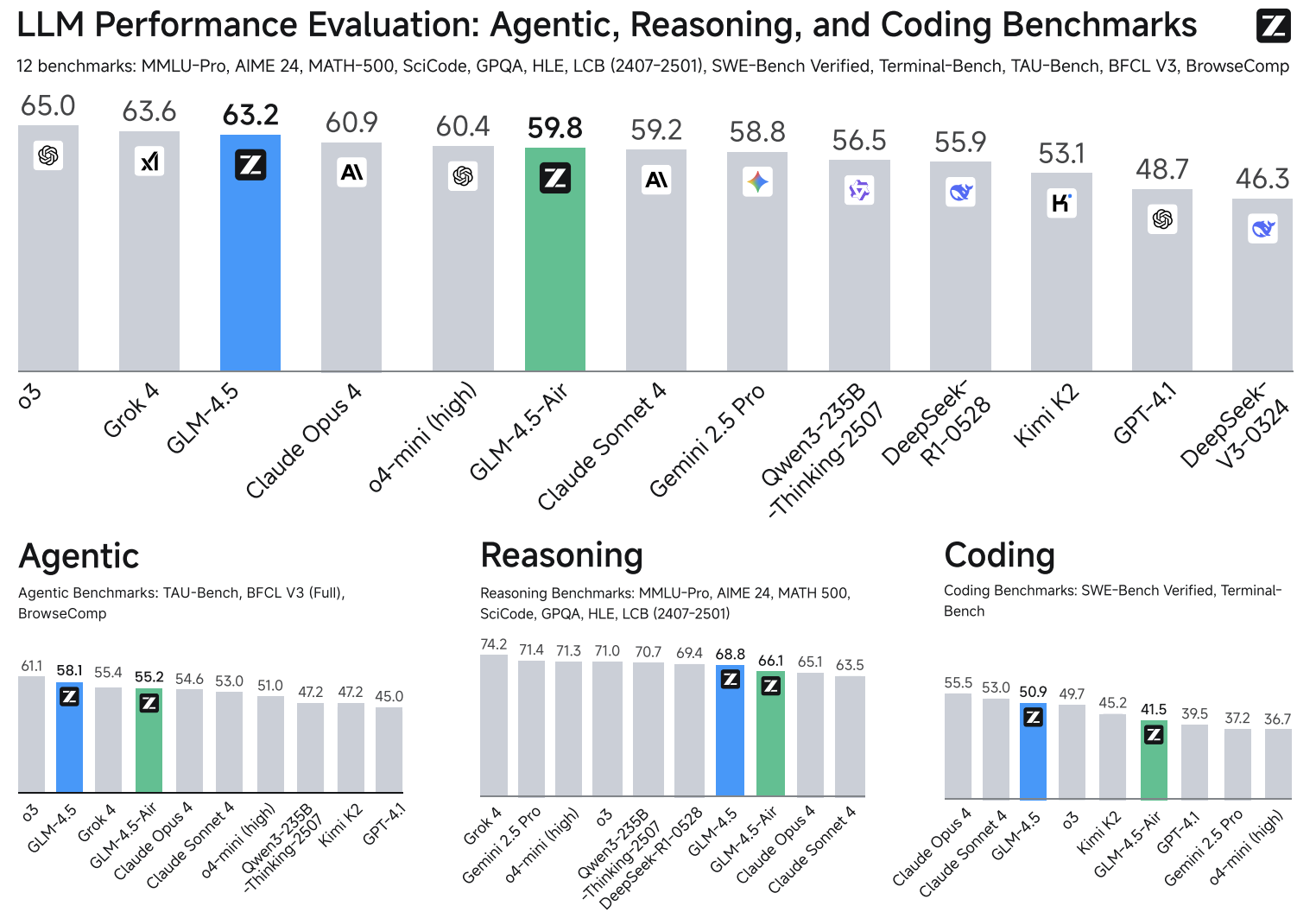

| GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models Arxiv 2025 Aohan Zeng, Xin Lv, Qinkai Zheng, Zhenyu Hou, Bin Chen, Chengxing Xie, Cunxiang Wang, Da Yin, ... [PDF] [Code] We present GLM-4.5, an open-source Mixture-of-Experts (MoE) large language model with 355B total parameters and 32B activated parameters, featuring a hybrid reasoning method that supports both thinking and direct response modes. |

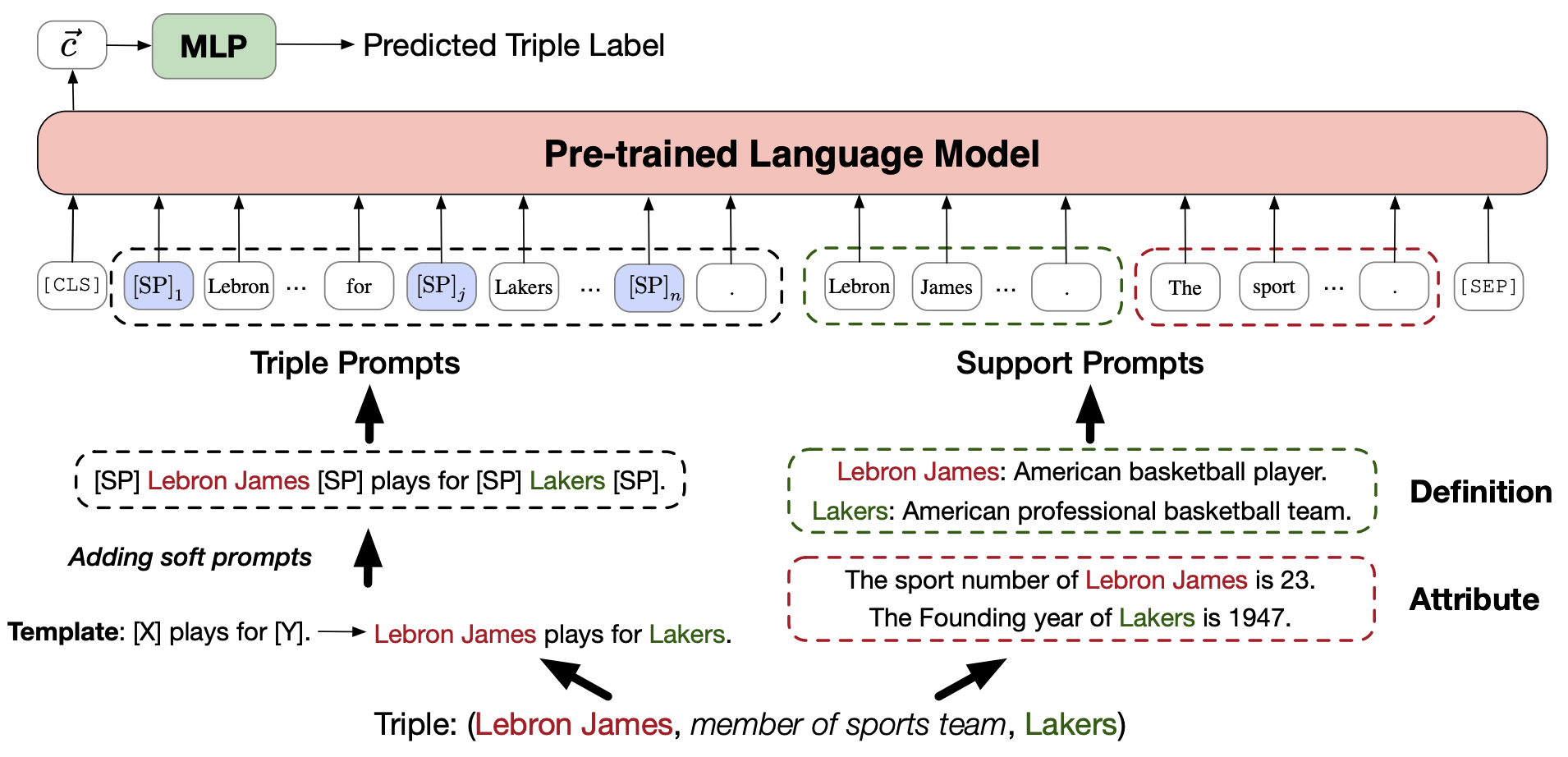

| Do Pre-trained Models Benefit Knowledge Graph Completion? A Reliable Evaluation and a Reasonable Approach ACL 2022 Findings Xin Lv, Yankai Lin, Yixin Cao, Lei Hou, Juanzi Li, Zhiyuan Liu, Peng Li, Jie Zhou [PDF] [Code] We highlight a more accurate evaluation setting, the open-world assumption (OWA), and propose a novel PLM-based KGC model named PKGC. Our experiments verify that the closed-world assumption (CWA) cannot yield accurate evaluation results. |

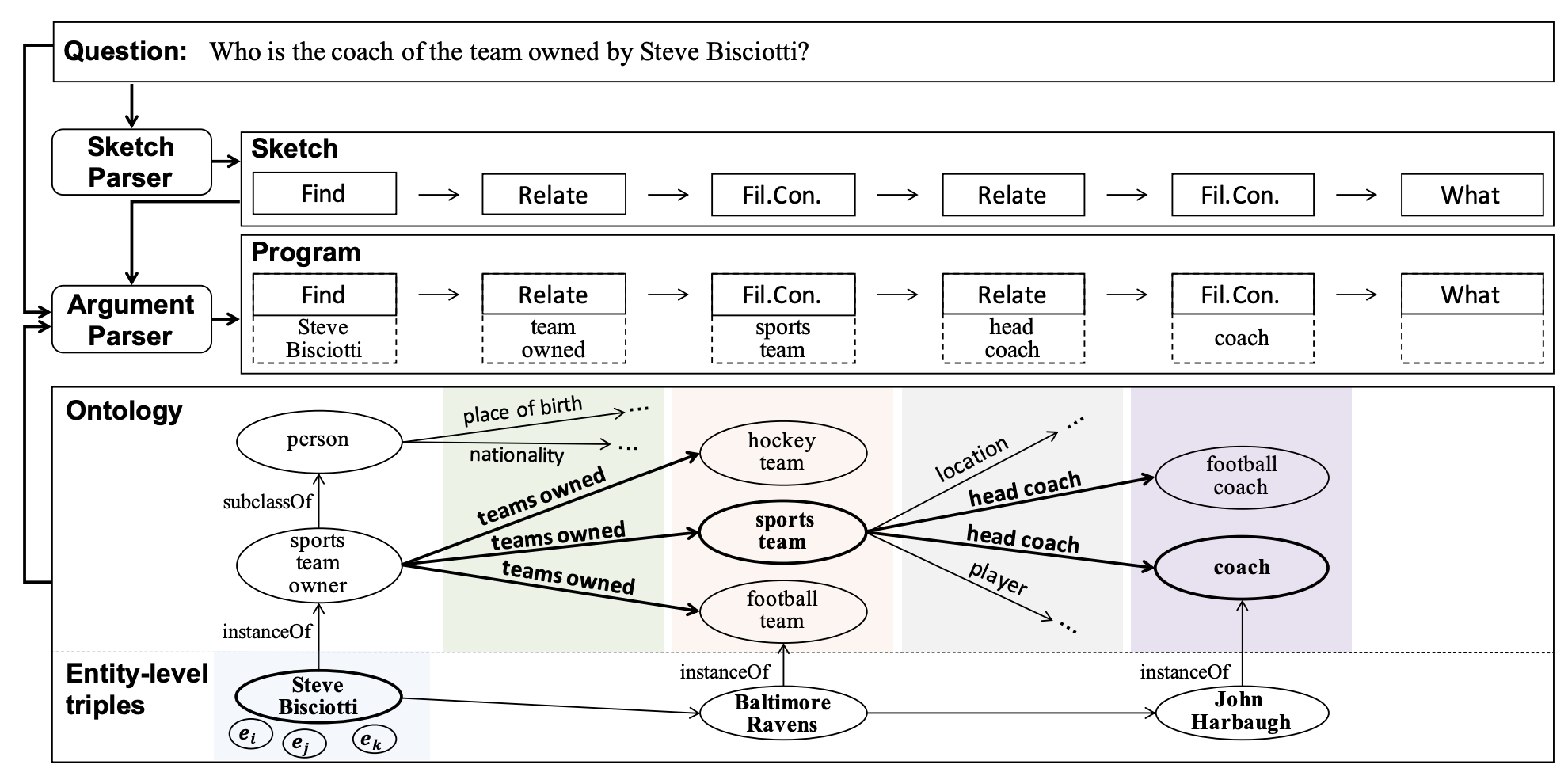

| Program Transfer for Answering Complex Questions over Knowledge Bases ACL 2022 Shulin Cao, Jiaxin Shi, Zijun Yao, Xin Lv, Jifan Yu, Lei Hou, Juanzi Li, Zhiyuan Liu, Jinghui Xiao [PDF] [Code] We propose the approach of program transfer, which aims to leverage the valuable program annotations on the rich-resourced KBs as external supervision signals to aid program induction for the low-resourced KBs that lack program annotations. |

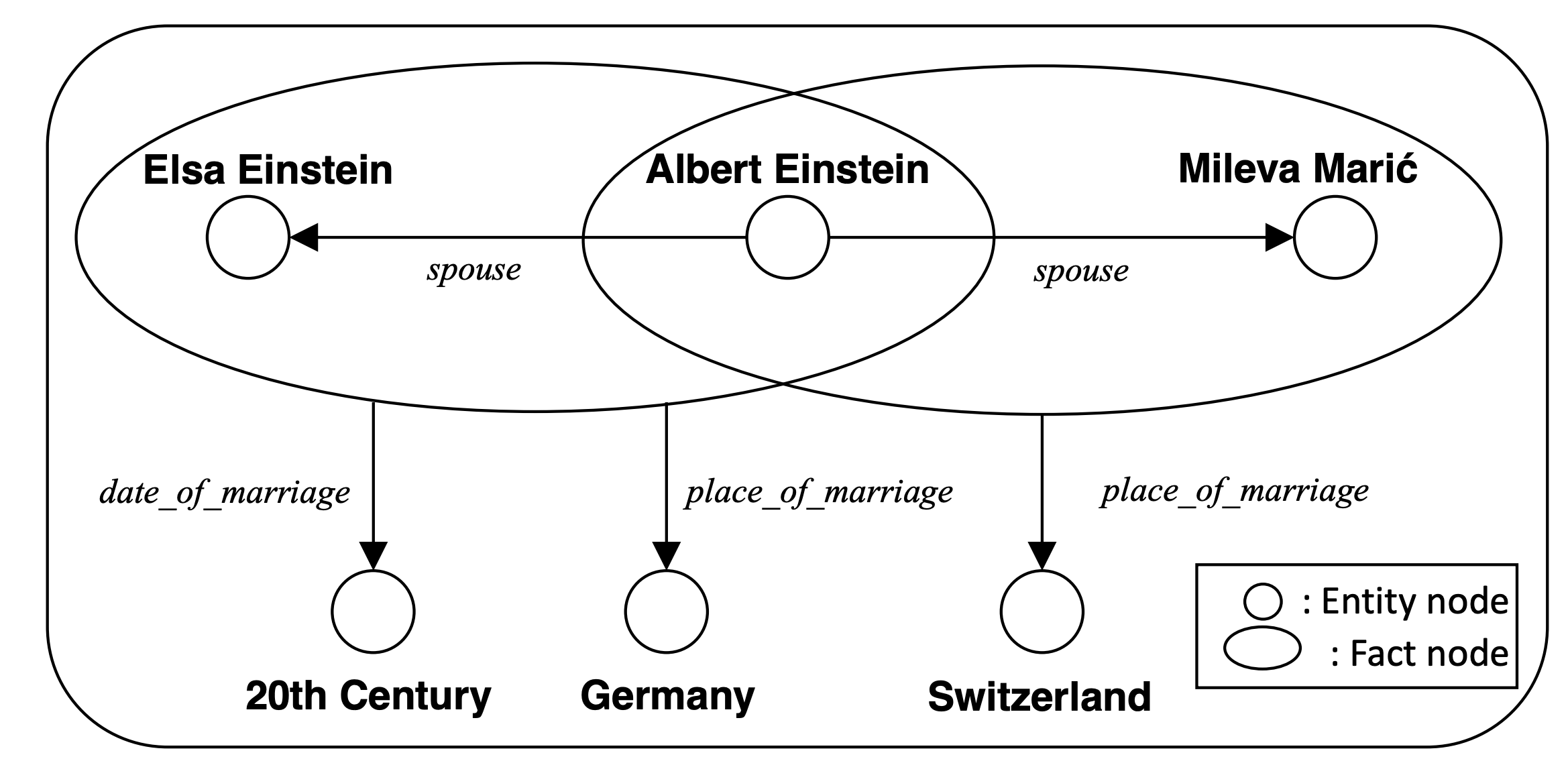

| Triple-as-Node Knowledge Graph and Its Embeddings DASFAA 2022 Xin Lv, Jiaxin Shi, Shulin Cao, Lei Hou, Juanzi Li [PDF] [Code] We contribute a benchmark WD16K with additional fact-relevant relations, and a framework FactE, which can represent facts, entities and relations in the same space via attention. |

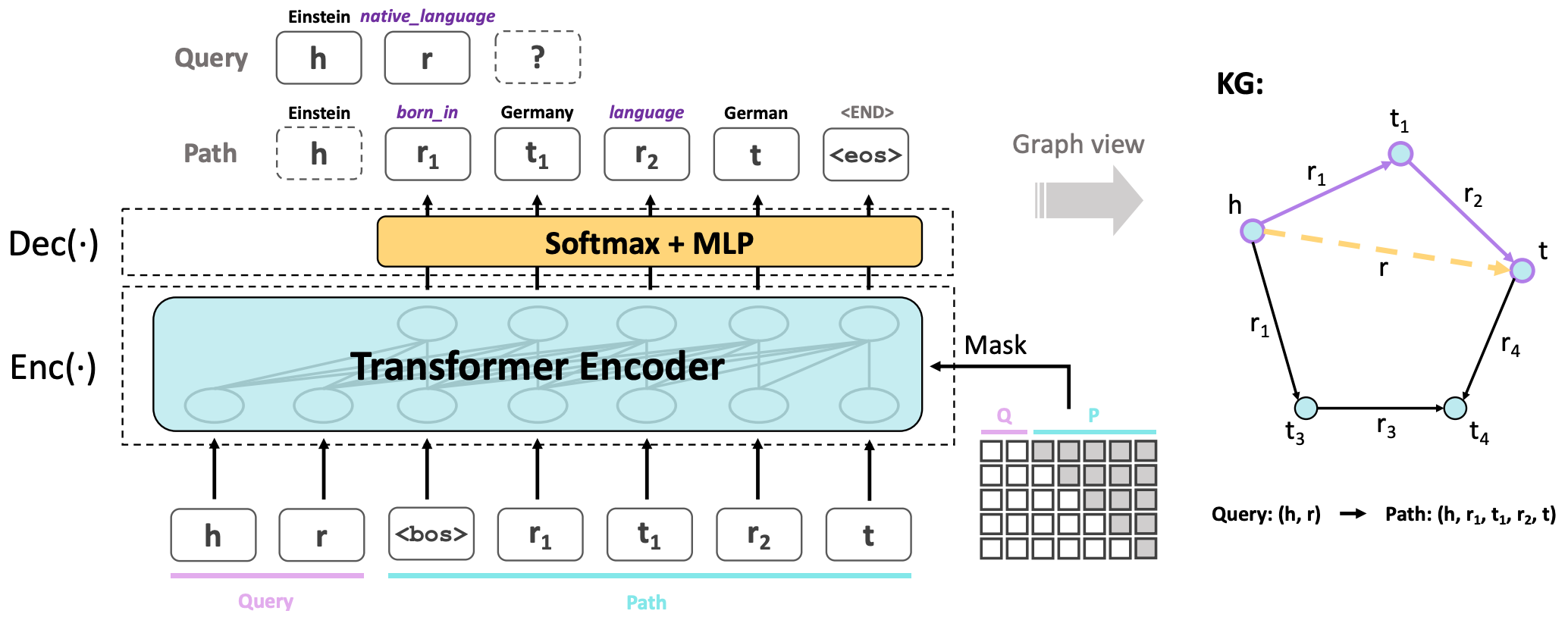

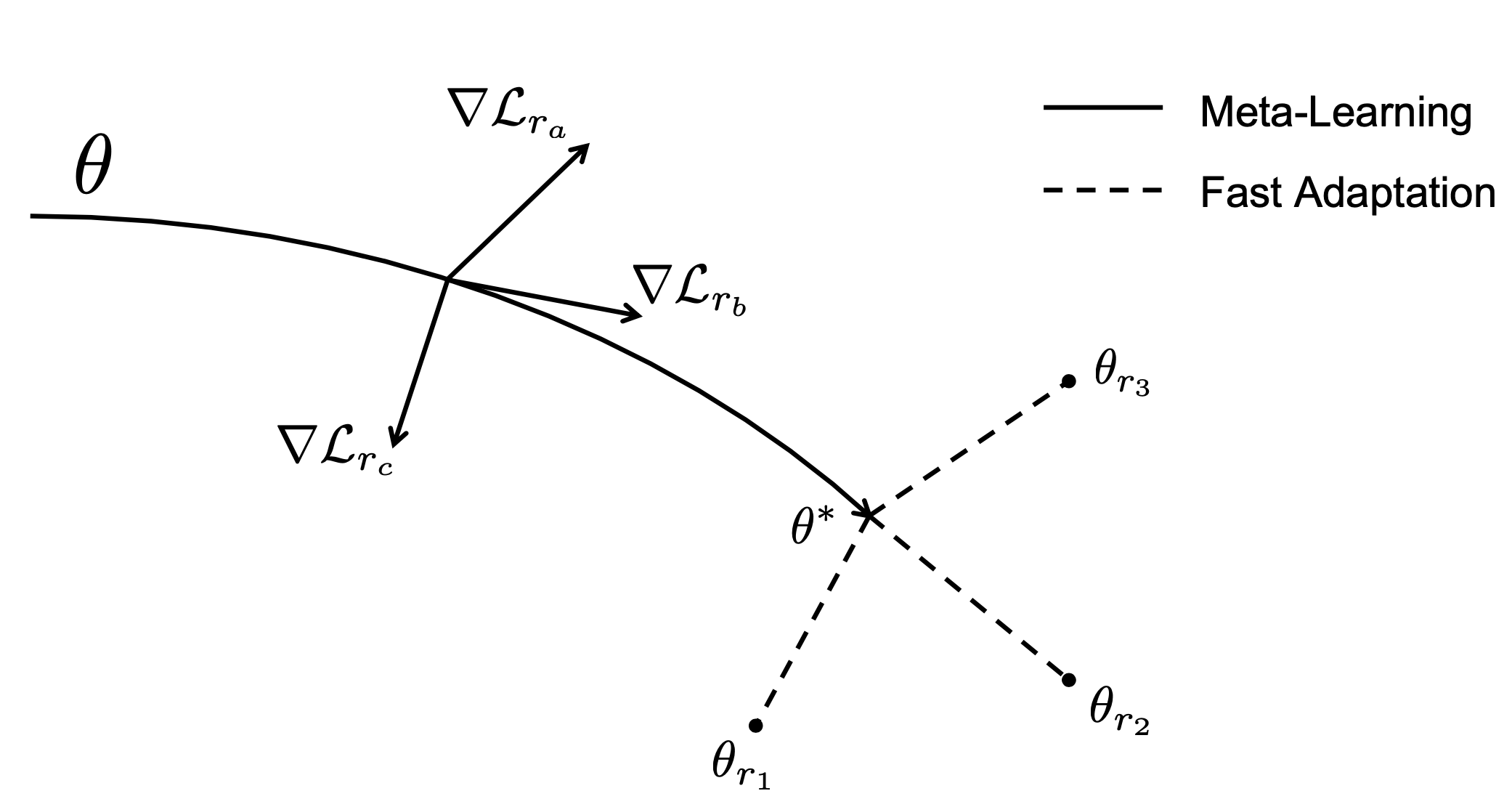

| SQUIRE: A Sequence-to-sequence Framework for Multi-hop Knowledge Graph Reasoning arXiv 2022 Yushi Bai, Xin Lv, Juanzi Li, Lei Hou, Yincen Qu, Zelin Dai, Feiyu Xiong [PDF] [Code] SQUIRE treats the triple query and the evidential path as sequences and uses a Transformer to learn and infer in an end-to-end fashion. We propose rule-enhanced learning and iterative training to further boost performance. |

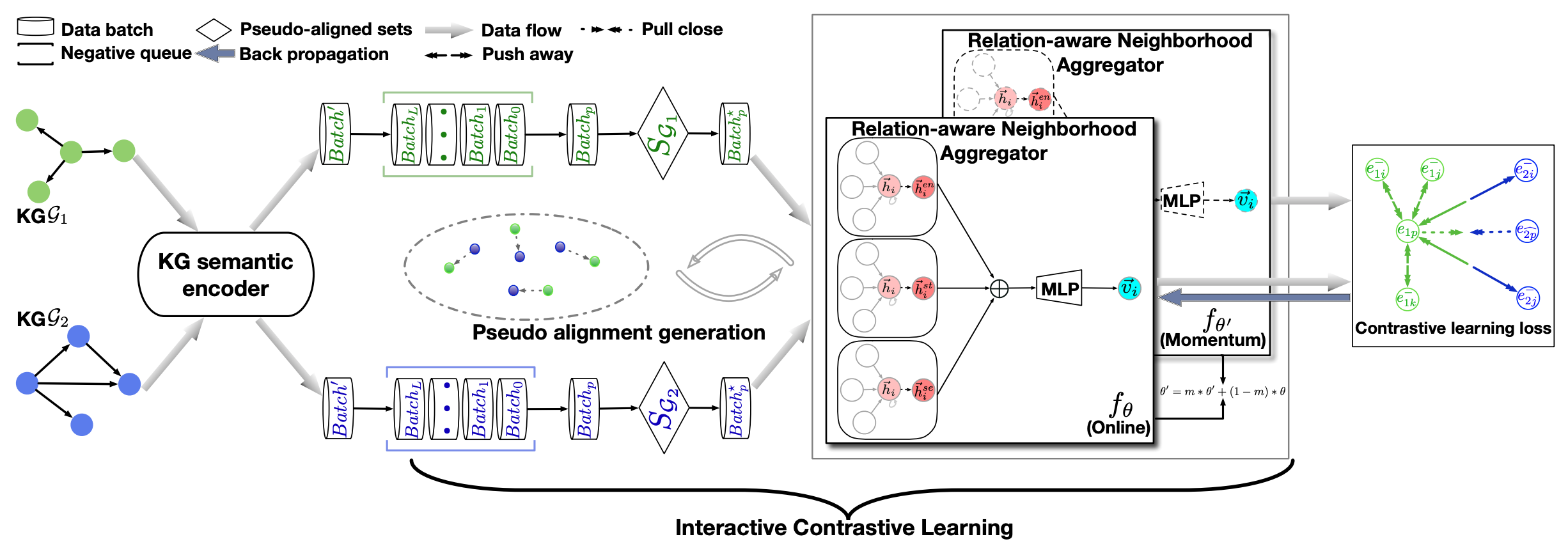

| ICLEA: Interactive Contrastive Learning for Self-supervised Entity Alignment arXiv 2022 Kaisheng Zeng, Zhenhao Dong, Lei Hou, Yixin Cao, Minghao Hu, Jifan Yu, Xin Lv, Juanzi Li, Ling Feng [PDF] [Code] We propose an interactive contrastive learning model for self-supervised entity alignment (EA). The model not only encodes the structures and semantics of entities (including entity name, entity description, and entity neighborhood), but also conducts cross-KG contrastive learning by building pseudo-aligned entity pairs. |

2021

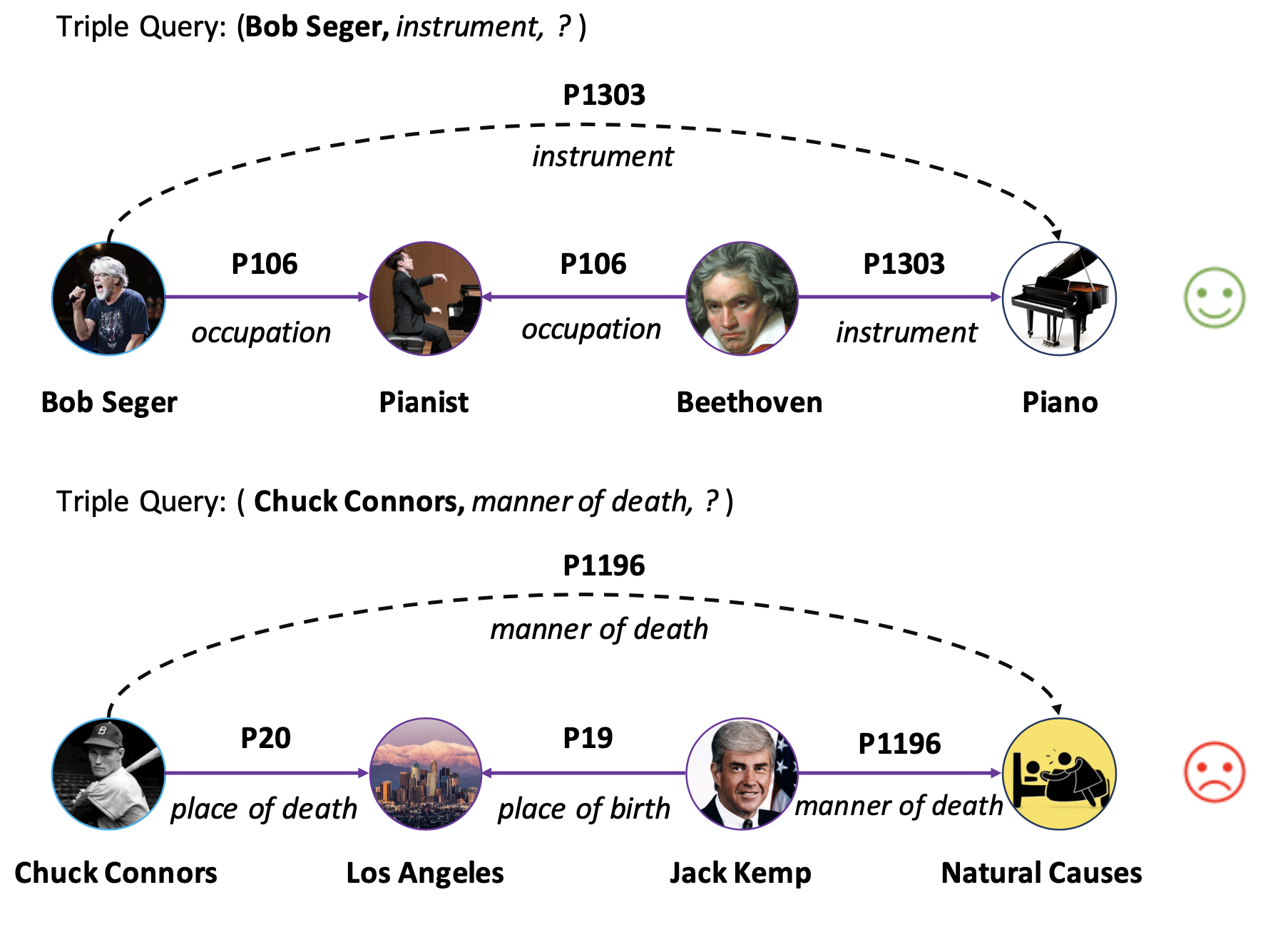

| Is Multi-Hop Reasoning Really Explainable? Towards Benchmarking Reasoning Interpretability EMNLP 2021 Xin Lv, Yixin Cao, Lei Hou, Juanzi Li, Zhiyuan Liu, Yichi Zhang and Zelin Dai [PDF] [Code] We propose a framework to quantitatively evaluate the interpretability of multi-hop reasoning models. Based on this framework, we annotate a dataset to form a benchmark that can accurately evaluate the interpretability. |

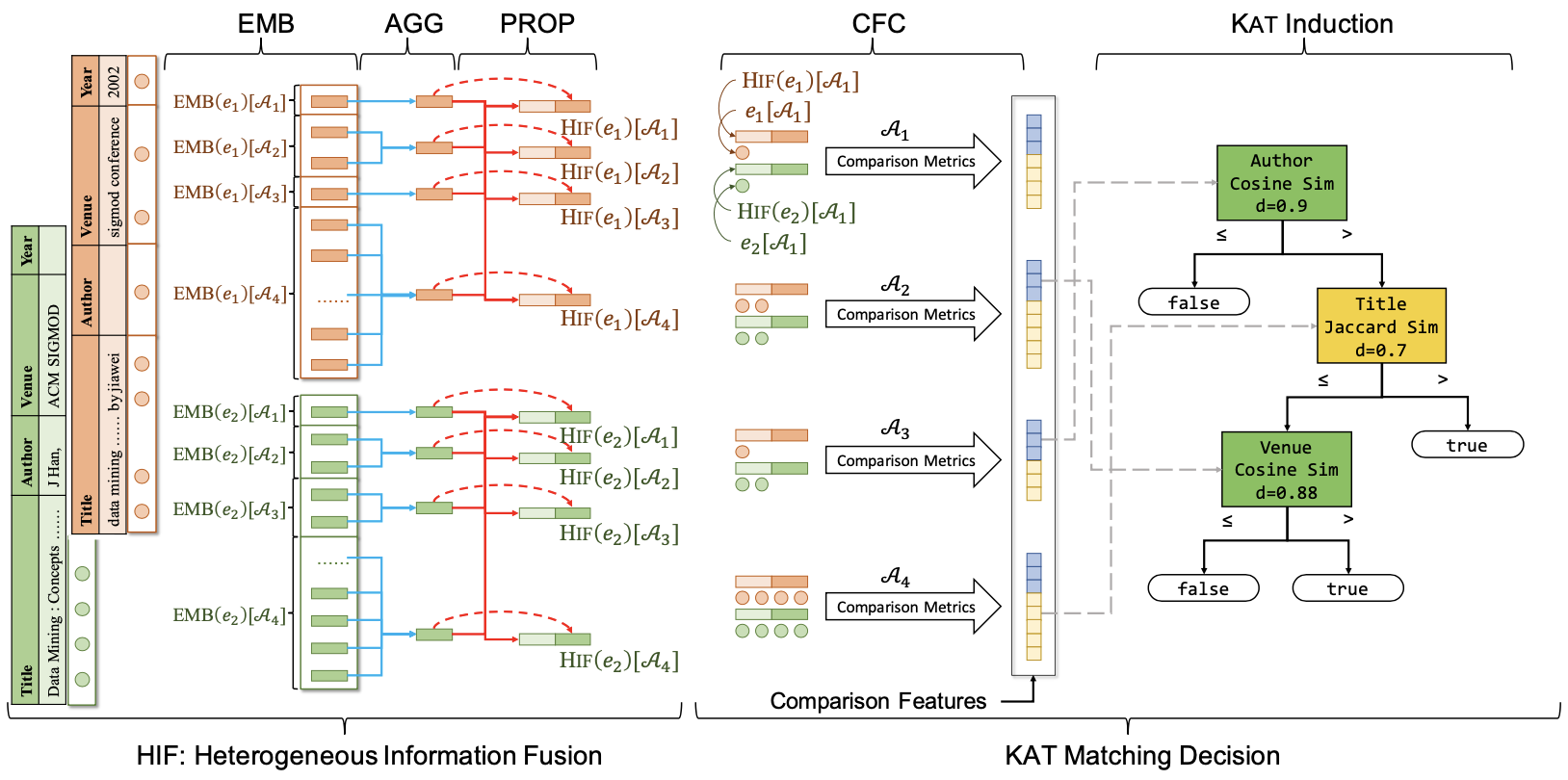

| Interpretable and Low-Resource Entity Matching via Decoupling Feature Learning from Decision Making ACL 2021 Zijun Yao, Chengjiang Li, Tiansi Dong, Xin Lv, Jifan Yu, Lei Hou, Juanzi Li, Yichi Zhang and Zelin Dai [PDF] [Code] We propose to decouple the representation learning stage from the decision making stage to fully utilize unlabeled data for the entity matching task. |

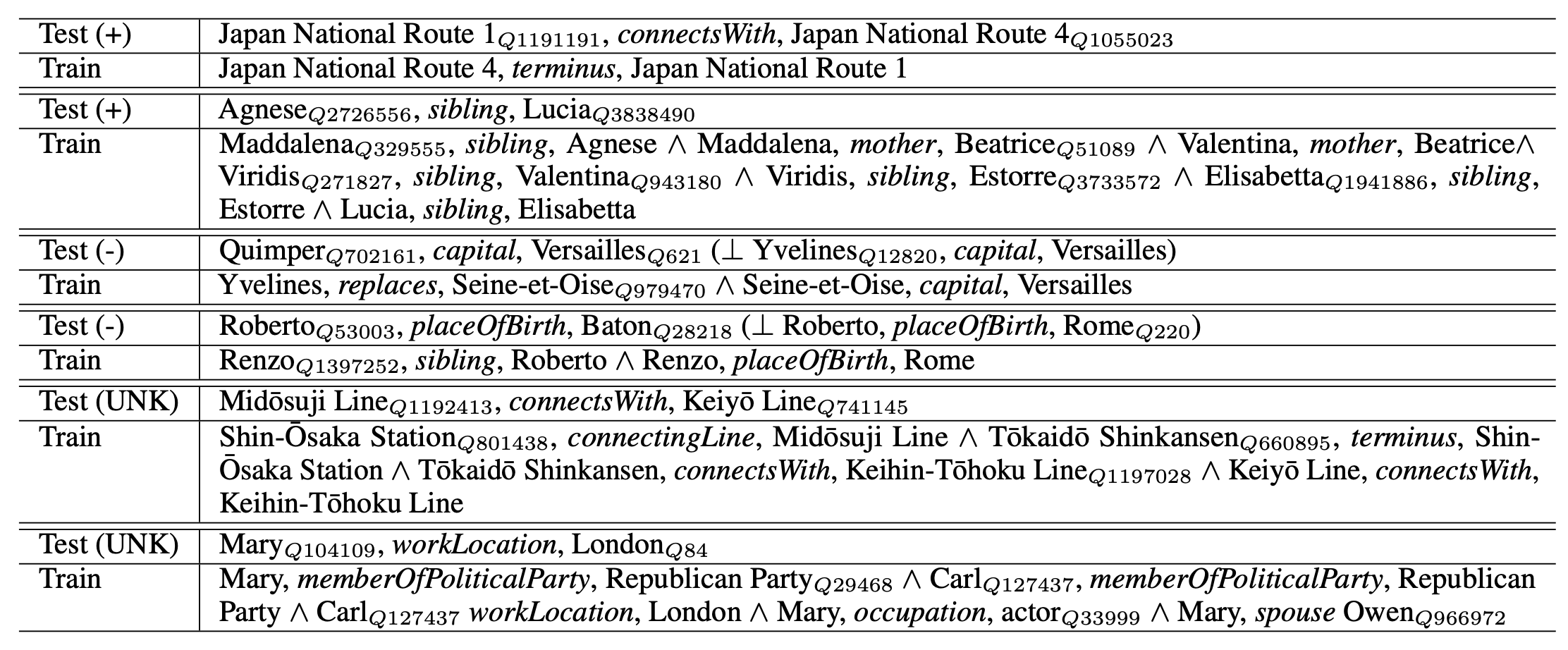

| Are Missing Links Predictable? An Inferential Benchmark for Knowledge Graph Completion ACL 2021 Yixin Cao, Xiang Ji, Xin Lv, Juanzi Li, Yonggang Wen and Hanwang Zhang [PDF] [Code] We highlight three principles for KGC datasets (inferential ability, assumptions, and patterns) and contribute a large-scale dataset, InferWiki. We establish a benchmark with seven KGC models of three types on two tasks: triple classification and link prediction. |

| KACC: A Multi-task Benchmark for Knowledge Abstraction, Concretization and Completion ACL 2021 Findings Jie Zhou, Shengding Hu, Xin Lv, Cheng Yang, Zhiyuan Liu, Wei Xu, Jie Jiang, Juanzi Li and Maosong Sun [PDF] [Code] We focus on the problems of knowledge abstraction, concretization, and completion. We propose a benchmark to test the abilities of models on KACC. |

2020

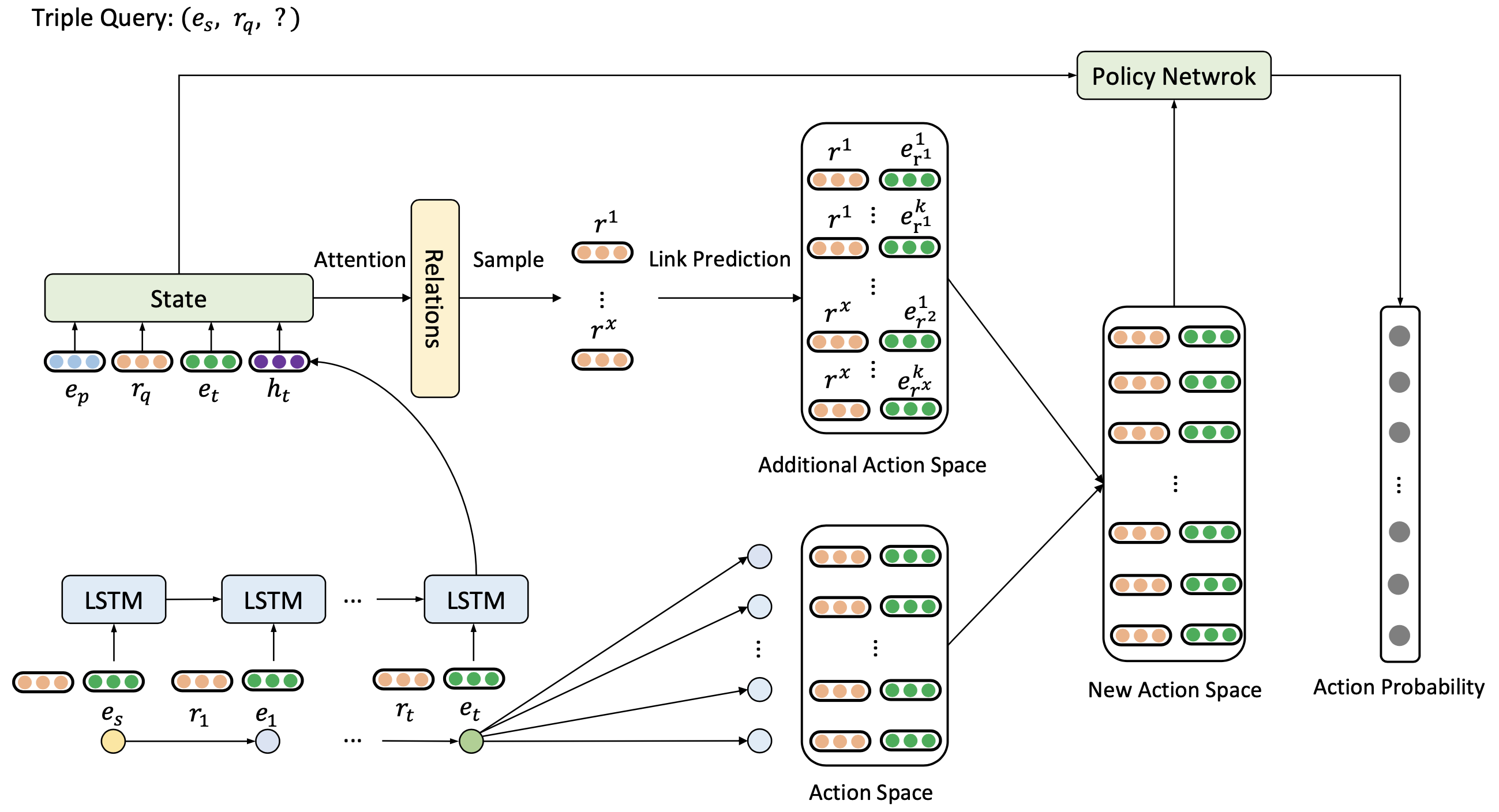

| Dynamic Anticipation and Completion for Multi-Hop Reasoning over Sparse Knowledge Graph EMNLP 2020 Xin Lv, Xu Han, Lei Hou, Juanzi Li, Zhiyuan Liu, Wei Zhang, Yichi Zhang, Hao Kong, Suhui Wu [PDF] [Code] We propose a reinforcement learning model named DacKGR with two strategies (dynamic anticipation and dynamic completion) designed for sparse KGs. These strategies alleviate the sparsity of KGs. |

2019


2018

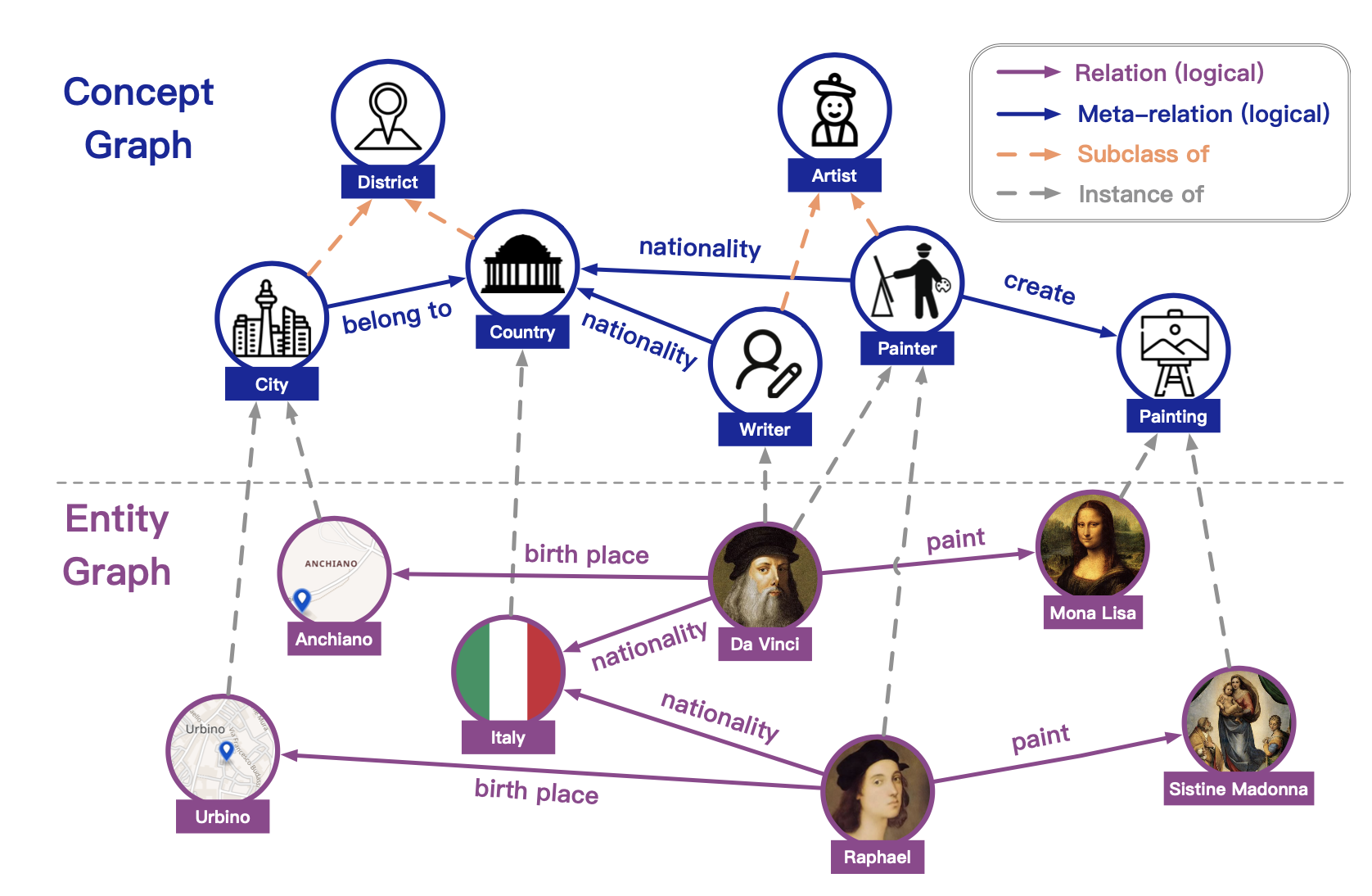

| Differentiating Concepts and Instances for Knowledge Graph Embedding EMNLP 2018 Xin Lv, Lei Hou, Juanzi Li, Zhiyuan Liu [PDF] [Code] We propose a new knowledge embedding model named TransC. TransC embeds instances, concepts, and relations in the same space to deal with the transitivity of isA relations. |

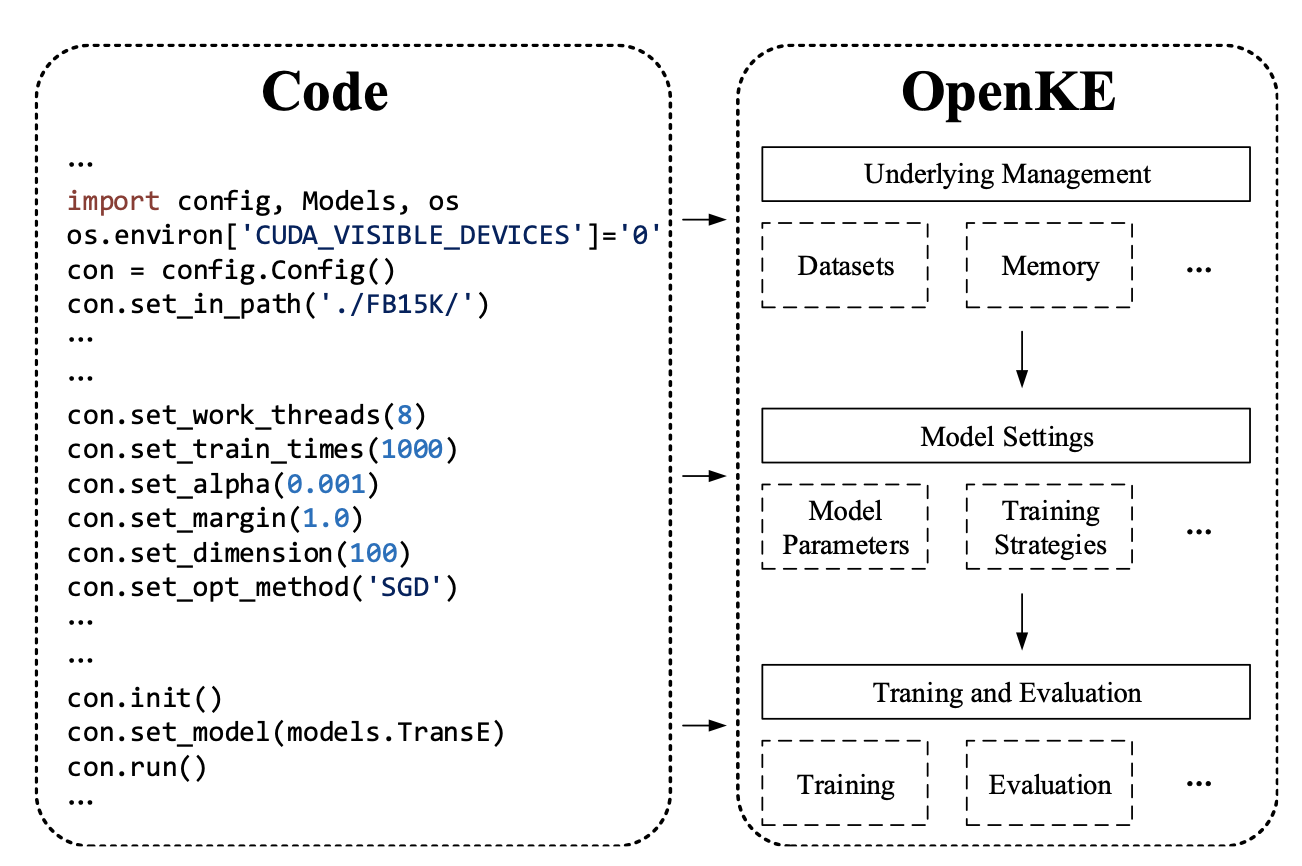

| OpenKE: An Open Toolkit for Knowledge Embedding EMNLP 2018 Xu Han, Shulin Cao, Xin Lv, Yankai Lin, Zhiyuan Liu, Maosong Sun, Juanzi Li [PDF] [Code] We propose an efficient open toolkit OpenKE for knowledge embedding. OpenKE builds a unified underlying platform to organize data and memory. It also applies GPU learning and parallel learning to speed up training. |